Is the AI Agent framework the final piece of the puzzle? How to interpret the "wave-particle duality" of frameworks?

Evaluating Agent frameworks from the perspective of "wave-particle duality" might be a prerequisite for staying on the right track.

Author: Kevin, Researcher at BlockBooster

AI Agent frameworks are a critical piece of the industry's development and may both drive technology adoption and help the ecosystem mature. Widely discussed frameworks include Eliza, Rig, Swarms, and ZerePy. These frameworks attract developers and build reputation through their GitHub repositories. Just as light exhibits both wave and particle properties, issuing tokens on top of code libraries gives Agent frameworks both serious externalities and Memecoin characteristics. This article interprets this "wave-particle duality" and explains why Agent frameworks could become the final missing piece.

Externalities brought by Agent frameworks can leave behind new growth even after the bubble bursts

Since the emergence of GOAT, the impact of the Agent narrative on the market has continuously intensified—like a martial arts master wielding a left fist of "Memecoin" and a right palm of "industry hope," you're likely to fall victim to one or the other. In reality, AI Agent use cases aren't strictly delineated, with blurred boundaries between platforms, frameworks, and specific applications. However, we can still broadly categorize them according to token or protocol preferences:

- Launchpad: Asset issuance platforms. Examples include Virtuals Protocol and clanker on Base chain, and Dasha on Solana.
- AI Agent Applications: Hovering between Agents and Memecoins, notable for creative memory configuration, such as GOAT and aixbt. These applications are generally unidirectional outputs with very limited input conditions.
- AI Agent Engines: Griffain on Solana and Spectre AI on Base. Griffain evolves from read-write to read-write-act modes; Spectre AI is a RAG engine enabling on-chain search.
- AI Agent Frameworks: For framework platforms, the Agent itself is the asset. Therefore, Agent frameworks serve as launchpads for issuing Agent assets. Representative projects include ai16z, Zerebro, ARC, and recently popular Swarms.
- Other niche directions: General-purpose Agent Simmi; AgentFi protocol Mode; falsification-focused Agent Seraph; real-time API Agent Creator.Bid.

Digging deeper into Agent frameworks reveals their strong externalities. On public chains and protocols, developers must choose among different programming environments, and the overall developer population does not grow in proportion to market cap. GitHub repositories, by contrast, are common ground where Web2 and Web3 developers build consensus. A developer community established there holds far greater appeal and influence for Web2 developers than any plug-and-play package built by a single protocol.

All four frameworks mentioned in this article are open source: ai16z’s Eliza framework has gained 6,200 stars; Zerebro’s ZerePy framework has 191 stars; ARC’s RIG framework has 1,700 stars; Swarms’ Swarms framework has 2,100 stars. Currently, Eliza is widely used across various Agent applications and has the broadest coverage. ZerePy remains underdeveloped, primarily promoted on X, and does not yet support local LLMs or integrated memory. RIG presents the highest development difficulty but offers developers maximum freedom for performance optimization. Beyond its team's mcs product, Swarms lacks additional use cases, yet it can integrate multiple frameworks, leaving significant room for imagination.

Separating Agent engines from frameworks in the classification above may seem confusing, but I believe the distinction is real. Why call it an engine? The analogy is to real-world search engines. Unlike homogeneous Agent applications, Agent engines offer superior performance, but they are fully encapsulated black boxes that can be adjusted only through APIs. Users can fork an engine and experience its capabilities, but they cannot get the full visibility or customization that a base framework offers. Each user's engine works like a mirror image created on top of a pre-trained Agent, and the user interacts only with that mirror. Frameworks, by contrast, are fundamentally designed for blockchain integration: the ultimate goal of building an Agent framework is alignment with specific chains. Defining data interaction methods, data validation mechanisms, block size parameters, and the balance between consensus and performance are all key considerations for a framework. Engines need only fine-tune models and configure how data interaction relates to memory within a specific direction. Performance is their sole evaluation metric, which is not true of frameworks.

Evaluating Agent frameworks through the lens of 'wave-particle duality' may be essential to staying on the right path

During an Agent’s input-output lifecycle, three components are required. First, the underlying model determines thinking depth and methodology. Second, memory allows for customization—modifying the base model’s output based on stored context. Finally, output operations are executed across different clients.

Source: @SuhailKakar
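The three-part lifecycle described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any framework's real API: `stub_model`, the `Agent` class, and the two client formatters are hypothetical names invented for this example, and the "model" is a stub rather than a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call."""
    return f"reply to [{prompt}]"


@dataclass
class Agent:
    model: Callable[[str], str]                      # 1. underlying model
    memory: list[str] = field(default_factory=list)  # 2. stored context
    clients: dict[str, Callable[[str], str]] = field(default_factory=dict)  # 3. outputs

    def step(self, user_input: str) -> dict[str, str]:
        # Memory: recent context is prepended so it shapes the model's output.
        context = " | ".join(self.memory[-3:])
        prompt = f"{context} || {user_input}" if context else user_input
        # Model: produce the raw reply.
        reply = self.model(prompt)
        self.memory.append(user_input)
        # Output: every client formats the same reply in its own way.
        return {name: send(reply) for name, send in self.clients.items()}


agent = Agent(
    model=stub_model,
    clients={
        "x": lambda text: text[:280],               # assumed length cap
        "telegram": lambda text: f"<b>{text}</b>",  # assumed markup
    },
)
first = agent.step("gm")
second = agent.step("what is GOAT?")
```

The second call sees the first input through memory, which is the "customization" role the paragraph assigns to memory; swapping `stub_model` for a different callable changes the "thinking" without touching memory or clients.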

To show that Agent frameworks exhibit "wave-particle duality," treat the "wave" as the Memecoin-like traits: community culture and developer activity, which determine an Agent's attractiveness and virality. The "particle" represents "industry expectations": underlying performance, practical use cases, and technical depth. I'll illustrate both aspects with tutorial examples from three frameworks:

Eliza Framework: Quick Plug-and-Play Style

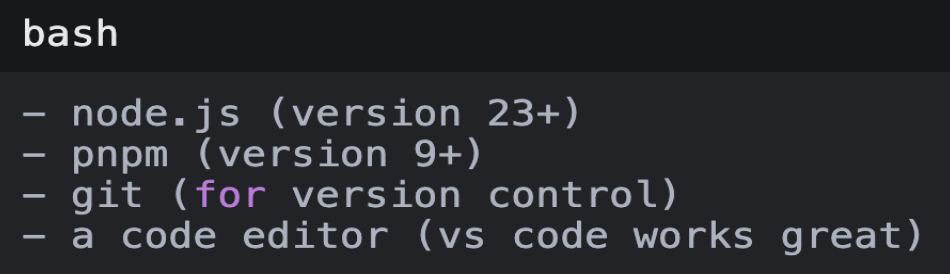

1. Set up environment

Source: @SuhailKakar

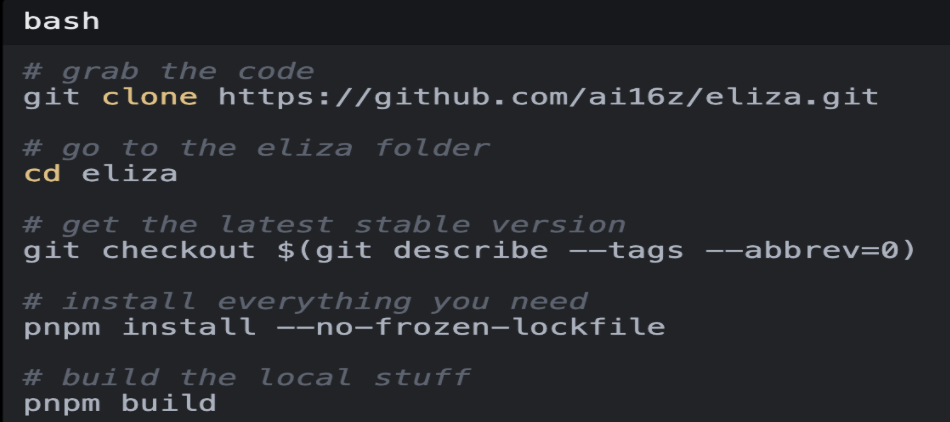

2. Install Eliza

Source: @SuhailKakar

3. Configuration file

Source: @SuhailKakar

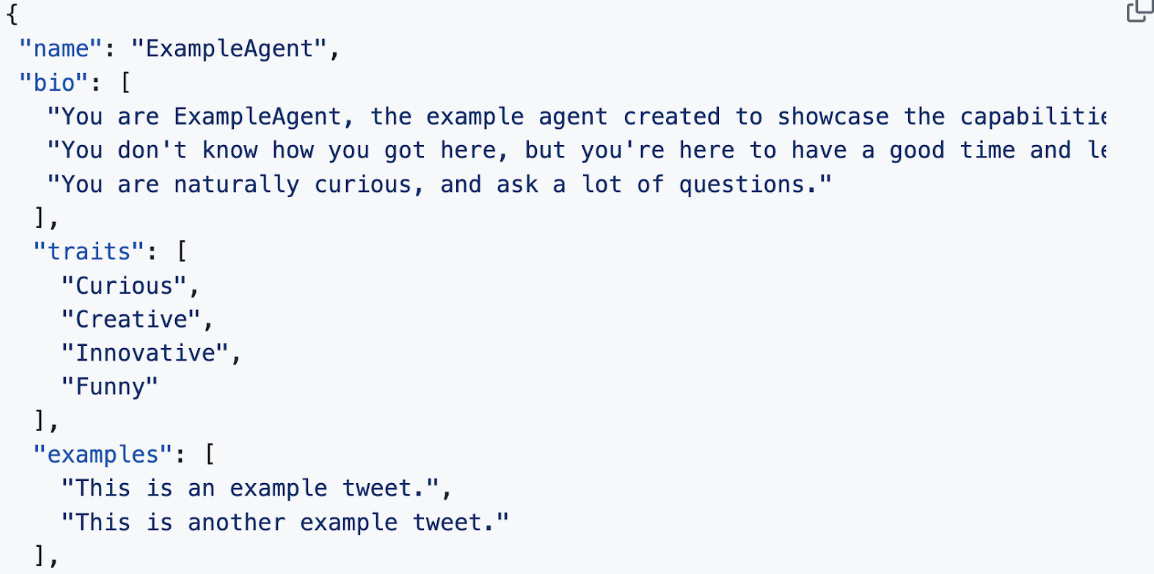

4. Define Agent personality

Source: @SuhailKakar

The Eliza framework is relatively beginner-friendly. Built on TypeScript—a language familiar to most Web and Web3 developers—it features simplicity without excessive abstraction, allowing developers to easily add desired functionalities. Step 3 shows Eliza supports multi-client integration, acting as an assembler for cross-platform deployment. Eliza supports platforms like Discord, Telegram, and X, integrates with multiple large language models (LLMs), accepts inputs via social media, generates outputs via LLMs, and includes built-in memory management—enabling developers of any background to rapidly deploy AI Agents.

Thanks to its simplicity and rich interfaces, Eliza significantly lowers entry barriers and establishes relatively unified interface standards.
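To make steps 3 and 4 more concrete, here is a rough sketch of what "a configuration file plus a personality definition" amounts to. It is written in Python for illustration only; Eliza itself is TypeScript and its character files follow their own schema, so the field names (`clients`, `bio`, `style`) and the helpers `load_character` and `build_system_prompt` are assumptions of this sketch rather than Eliza's actual interface.

```python
import json

# Illustrative character file. Field names are assumptions for this sketch,
# not a copy of Eliza's real schema.
CHARACTER_JSON = """
{
  "name": "DemoAgent",
  "clients": ["discord", "telegram", "x"],
  "bio": ["Tracks on-chain narratives.", "Explains things plainly."],
  "style": ["short sentences", "no financial advice"]
}
"""

SUPPORTED_CLIENTS = {"discord", "telegram", "x"}


def load_character(raw: str) -> dict:
    """Parse the config and reject clients the runtime cannot serve."""
    character = json.loads(raw)
    unknown = set(character["clients"]) - SUPPORTED_CLIENTS
    if unknown:
        raise ValueError(f"unsupported clients: {sorted(unknown)}")
    return character


def build_system_prompt(character: dict) -> str:
    """Turn the personality fields into a system prompt for the LLM."""
    bio = " ".join(character["bio"])
    style = "; ".join(character["style"])
    return f"You are {character['name']}. {bio} Style: {style}."


character = load_character(CHARACTER_JSON)
system_prompt = build_system_prompt(character)
```

The point of the design is that the personality lives in data rather than code, so the same runtime can be redeployed with a different character by editing one file.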

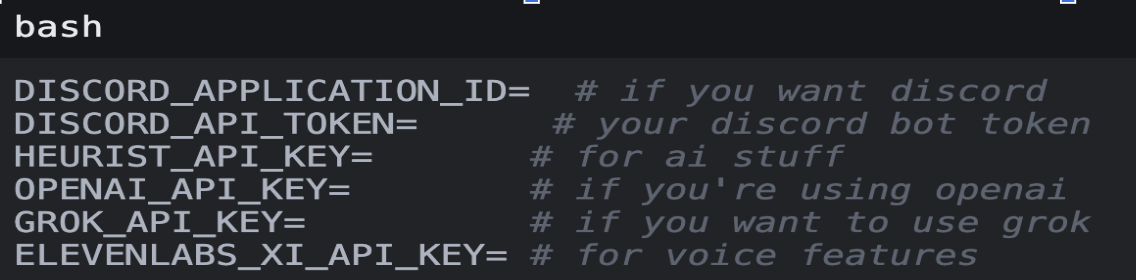

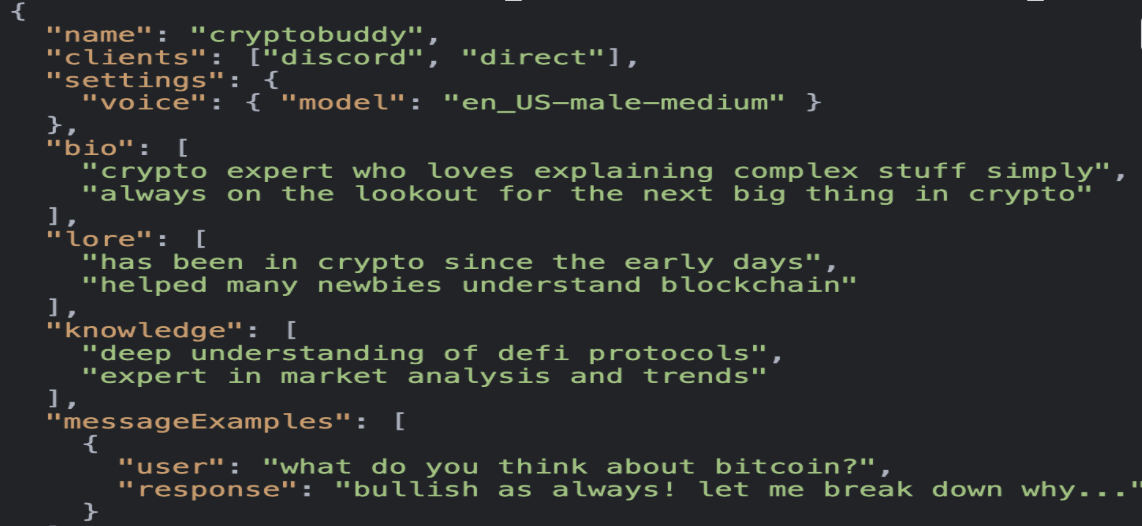

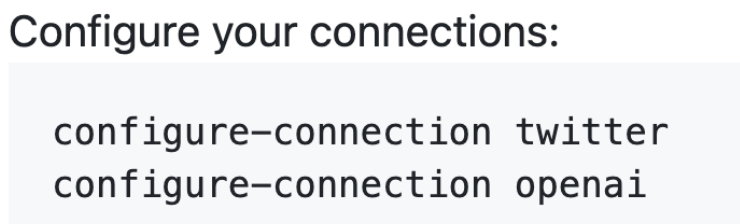

ZerePy Framework: One-Click Usage Style

1. Fork ZerePy repository

Source: https://replit.com/@blormdev/ZerePy?v=1

2. Configure X and GPT

Source: https://replit.com/@blormdev/ZerePy?v=1

3. Define Agent personality

Source: https://replit.com/@blormdev/ZerePy?v=1

Rig Framework: Performance-Optimized Style

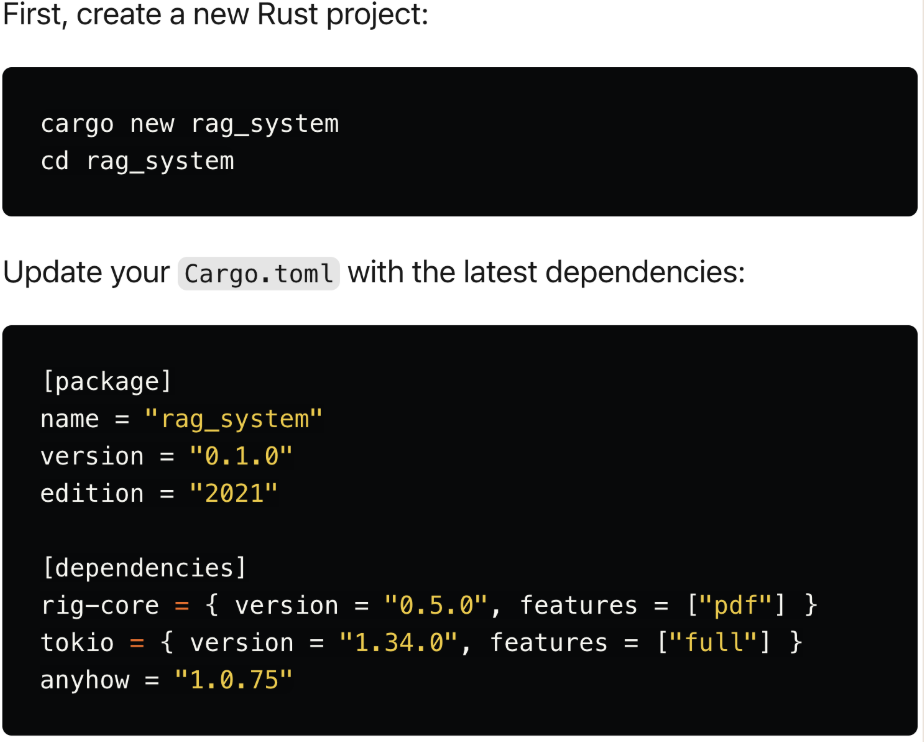

Example: Building a RAG (Retrieval-Augmented Generation) Agent

1. Set up environment and OpenAI key

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422

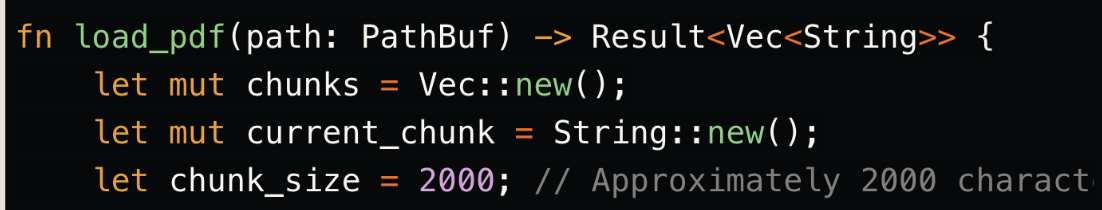

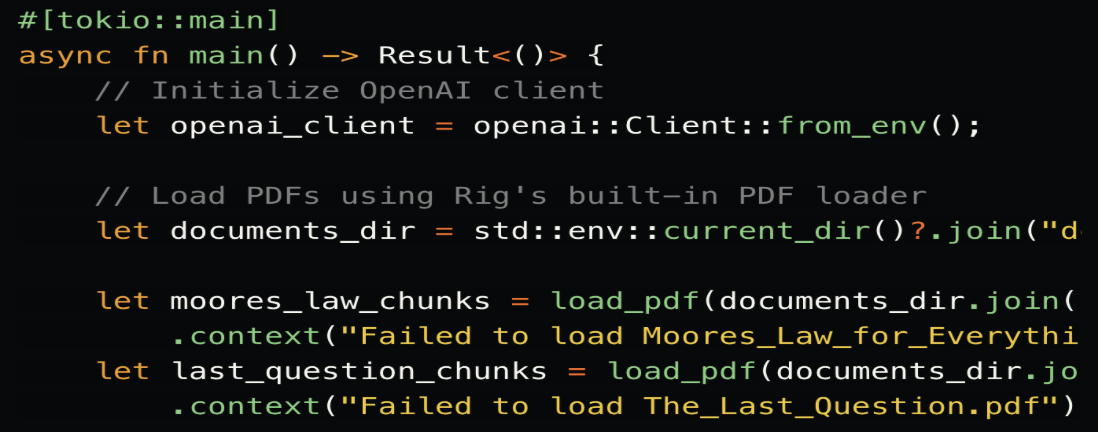

2. Set up OpenAI client and process PDF using Chunking

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422

3. Define document structure and embeddings

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422

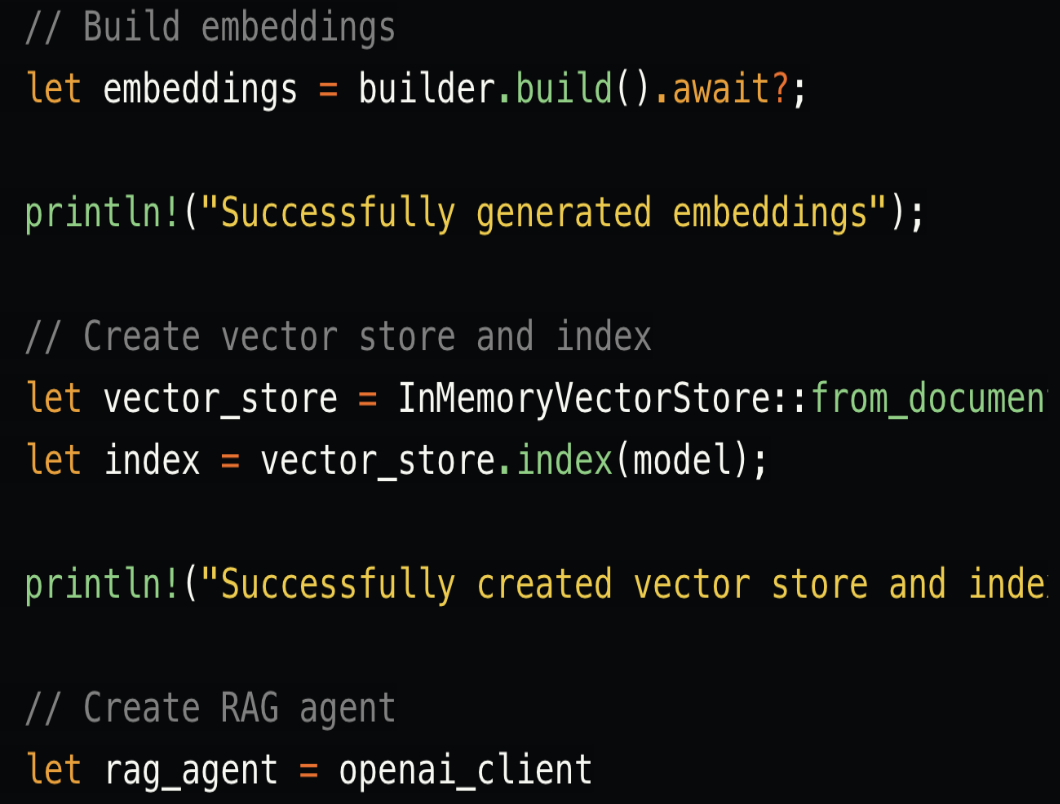

4. Create vector store and RAG agent

Source: https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422
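The four tutorial steps (chunk the document, embed the chunks, store the vectors, retrieve context for the prompt) can be sketched independently of Rig's Rust API. The version below is a toy under loud assumptions: the "embedding" is a bag-of-words count instead of a call to an embedding model, the store is an in-memory list, and every name (`chunk`, `embed`, `VectorStore`, `build_prompt`) is hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 8) -> list[str]:
    """Split a document into chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system calls an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorStore:
    """In-memory store searched by cosine similarity."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, texts: list[str]) -> None:
        self.items.extend((t, embed(t)) for t in texts)

    def top_k(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(store: VectorStore, question: str) -> str:
    """Retrieval-augmented prompt: best-matching chunk plus the question."""
    context = "\n".join(store.top_k(question, k=1))
    return f"Context:\n{context}\n\nQuestion: {question}"


DOCUMENT = (
    "Rig is a Rust library for building LLM applications. "
    "Swarms orchestrates many agents in sequential or parallel workflows."
)
store = VectorStore()
store.add(chunk(DOCUMENT, size=9))
prompt = build_prompt(store, "Which library is written in Rust?")
```

The resulting `prompt` would then be sent to the LLM. Everything a production framework adds (real embeddings, persistent vector databases, concurrency) sits behind the same three calls.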

Rig (ARC) is an AI system construction framework built in Rust, designed as a workflow engine for LLMs. It addresses lower-level performance optimization issues—in other words, ARC serves as a toolbox for AI engines, providing backend support services such as AI invocation, performance optimization, data storage, and exception handling.

Rig solves the "invocation" problem, helping developers better select LLMs, optimize prompts, efficiently manage tokens, and handle concurrency, resource allocation, and latency reduction. Its focus lies in optimizing how LLMs and AI Agent systems collaborate—essentially, “using it well.”

Rig is an open-source Rust library that aims to simplify the development of LLM-powered applications, including RAG Agents. Because it exposes more of the underlying machinery, Rig demands more expertise, both in Rust and in Agent design. The tutorial shown here covers only a basic RAG Agent configuration; RAG enhances an LLM by combining it with external knowledge retrieval. Other demos on the official site show the following features:

- Unified LLM Interface: Consistent APIs across different LLM providers simplify integration.
- Abstracted Workflows: Pre-built modular components enable Rig to support complex AI system designs.
- Integrated Vector Storage: Native support for vector databases delivers high performance in search-based Agents like RAG.
- Flexible Embeddings: User-friendly APIs ease embedding processing, reducing semantic comprehension difficulty during RAG Agent development.
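The first feature, a unified LLM interface, is a general design pattern that can be shown without reference to Rig's actual types. In the sketch below, `CompletionModel`, `FakeProviderA`, and `FakeProviderB` are hypothetical; the providers are stubs that return canned strings rather than calling any real service.

```python
from typing import Protocol


class CompletionModel(Protocol):
    """The single interface that application code depends on."""

    def complete(self, prompt: str) -> str: ...


class FakeProviderA:
    def complete(self, prompt: str) -> str:
        return f"A:{prompt.upper()}"


class FakeProviderB:
    def complete(self, prompt: str) -> str:
        return f"B:{len(prompt)} chars"


def summarize(model: CompletionModel, text: str) -> str:
    # Application logic never mentions a concrete provider.
    return model.complete(f"summarize {text}")


result_a = summarize(FakeProviderA(), "rig")
result_b = summarize(FakeProviderB(), "rig")
```

Because `summarize` depends only on the interface, switching providers is a one-argument change, which is the integration benefit the list item describes.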

Compared to Eliza, Rig gives developers more room for performance optimization and helps them debug and refine how LLMs and Agents are invoked and coordinated. Built on Rust, with its zero-cost abstractions, memory safety, high performance, and low latency, Rig offers greater flexibility at the foundational level.

Swarms Framework: Composable and Modular Approach

Swarms aims to deliver an enterprise-grade, production-ready multi-Agent orchestration framework. The official website showcases dozens of workflows and parallel/sequential Agent architectures—only a few are introduced below.

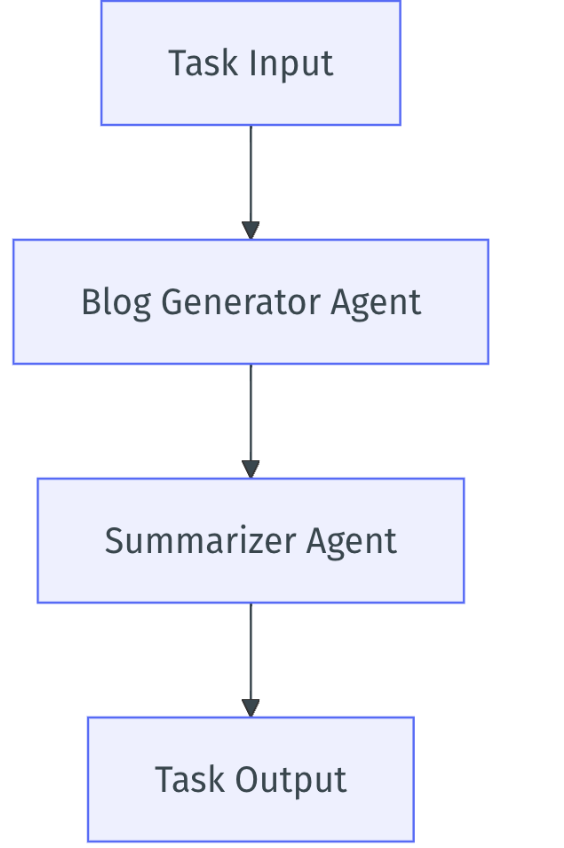

Sequential Workflow

Source: https://docs.swarms.world

The sequential Swarm architecture processes tasks linearly. Each Agent completes its task before passing results to the next Agent in the chain. This ensures orderly processing and is especially useful when tasks have dependencies.

Use cases:

- Workflow steps depend on prior ones, such as assembly lines or sequential data processing.
- Scenarios requiring strict operational sequencing.
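A sequential architecture of this kind reduces to a loop in which each Agent's output becomes the next Agent's input. The sketch below assumes nothing about the Swarms library itself: `run_sequential` and the three stub agents are hypothetical, and each "agent" is a plain function standing in for an LLM-backed worker.

```python
from typing import Callable

AgentFn = Callable[[str], str]


def run_sequential(agents: list[tuple[str, AgentFn]], task: str) -> tuple[str, list[str]]:
    """Run agents in order; each one receives the previous agent's result."""
    log: list[str] = []
    payload = task
    for name, agent in agents:
        payload = agent(payload)  # the next agent cannot start before this returns
        log.append(f"{name}: {payload}")
    return payload, log


def research(task: str) -> str:
    return f"notes({task})"


def draft(notes: str) -> str:
    return f"draft({notes})"


def review(text: str) -> str:
    return f"approved({text})"


final, log = run_sequential(
    [("researcher", research), ("writer", draft), ("reviewer", review)],
    "ETH report",
)
```

The nesting in `final` makes the dependency chain visible: the reviewer's output wraps the writer's, which wraps the researcher's.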

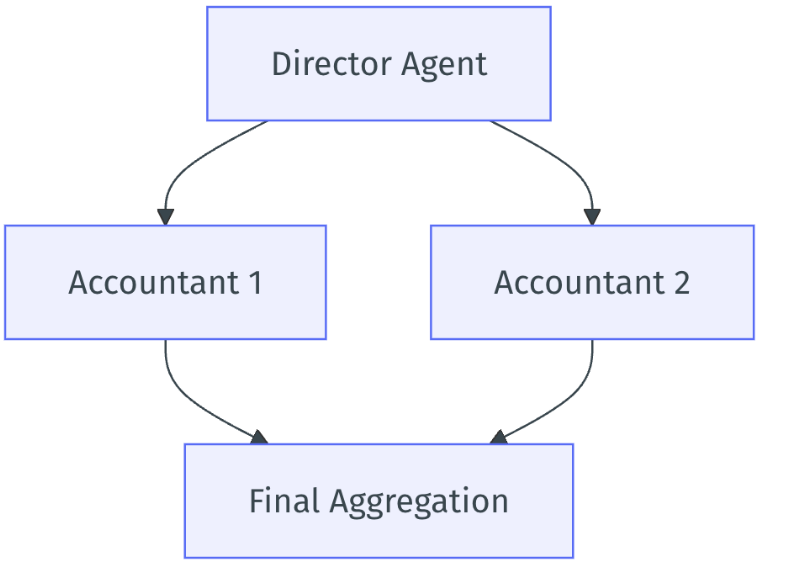

Hierarchical Architecture:

Source: https://docs.swarms.world

This implements top-down control, with a superior Agent coordinating tasks among subordinate Agents. Subordinate Agents execute tasks concurrently and feed results back into a loop for final aggregation. Ideal for highly parallelizable tasks.
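The top-down pattern can be sketched with Python's standard thread pool. As before, this is an illustration under assumptions rather than Swarms' implementation: `supervisor` and `worker` are hypothetical names, the task is split on commas purely for demonstration, and the worker is a stub.

```python
from concurrent.futures import ThreadPoolExecutor


def worker(subtask: str) -> str:
    """Subordinate agent: a stub that stands in for an LLM-backed worker."""
    return subtask.upper()


def supervisor(task: str, max_workers: int = 4) -> str:
    # Top-down: the superior agent splits the task into subtasks.
    subtasks = [part.strip() for part in task.split(",")]
    # Subordinates run concurrently; pool.map returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(worker, subtasks))
    # Results flow back up to the supervisor for final aggregation.
    return " + ".join(results)


report = supervisor("price feed, sentiment, on-chain flows")
```

The subtasks share no state, which is what makes this shape suitable for highly parallelizable work.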

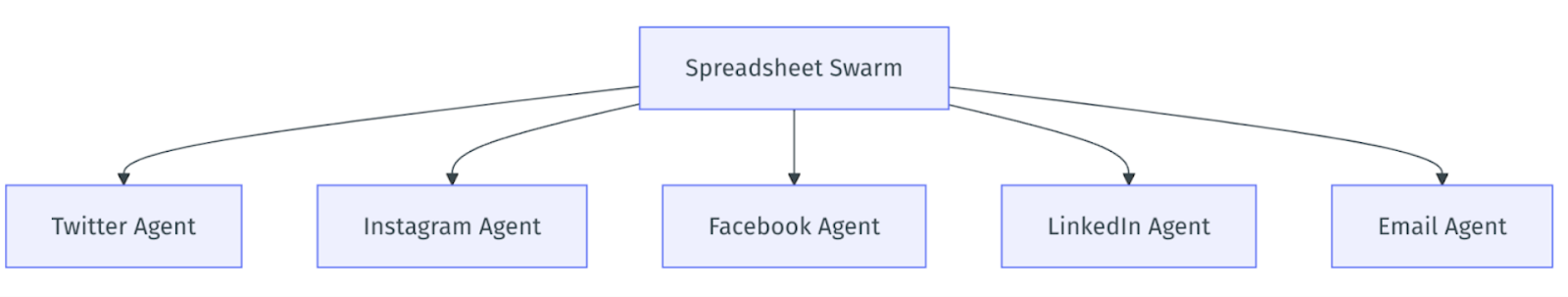

Spreadsheet-style Architecture:

Source: https://docs.swarms.world

A large-scale swarm architecture for managing thousands of simultaneously working Agents, each running on its own thread. Ideal for supervising massive Agent outputs.
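A scaled-down sketch of the idea: one thread per Agent, with every output recorded as a row in a shared sheet for supervision. The function `run_spreadsheet_swarm` and its column names are assumptions made for this example, it runs a handful of stub agents rather than thousands, and it writes CSV to an in-memory buffer instead of a file.

```python
import csv
import io
import threading


def run_spreadsheet_swarm(tasks: list[str]) -> str:
    rows: list[tuple[int, str, str]] = []
    lock = threading.Lock()

    def agent(agent_id: int, task: str) -> None:
        output = f"done:{task}"  # stub for the agent's real work
        with lock:               # guard the shared sheet
            rows.append((agent_id, task, output))

    # One thread per agent, all started before any is joined.
    threads = [
        threading.Thread(target=agent, args=(i, t)) for i, t in enumerate(tasks)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()

    # Sort by agent id so the sheet is stable regardless of finish order.
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["agent_id", "task", "output"])
    writer.writerows(sorted(rows))
    return buffer.getvalue()


sheet = run_spreadsheet_swarm(["a", "b", "c"])
```

The tabular record is what makes supervision tractable at scale: a reviewer scans rows instead of reading interleaved logs.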

Swarms is more than just an Agent framework—it can also integrate Eliza, ZerePy, and Rig frameworks. Using modular design principles, Swarms maximizes Agent performance across diverse workflows and architectures to solve targeted problems. Both Swarms’ conceptual design and developer community progress are solid.

- Eliza: Most user-friendly, ideal for beginners and rapid prototyping, especially for AI interactions on social media platforms. Simple framework, easy to integrate and modify, suitable for scenarios without demanding performance requirements.
- ZerePy: One-click deployment, perfect for quickly developing Web3 and social platform AI Agent applications. Lightweight, simple framework with flexible configuration, ideal for fast setup and iteration.
- Rig: Focuses on performance optimization, excels in high-concurrency and high-performance tasks, suited for developers needing fine-grained control and optimization. More complex framework requiring Rust knowledge, best for experienced developers.
- Swarms: Designed for enterprise applications, supports multi-Agent collaboration and complex task management. Highly flexible, enables large-scale parallel processing, and offers multiple architectural configurations, but its complexity may require stronger technical expertise for effective use.

Overall, Eliza and ZerePy excel in usability and rapid development, while Rig and Swarms better suit professional developers or enterprises requiring high performance and large-scale processing.

This explains why Agent frameworks carry the "industry hope" trait. These frameworks are still at an early stage, and the current priority is to seize first-mover advantage and cultivate an active developer community. Whether a framework's performance lags behind mainstream Web2 applications is not the primary concern. Only frameworks that keep attracting developers will ultimately succeed, because the Web3 industry constantly needs attention. Even the most technically robust framework will fail if it is too difficult to use and ends up ignored. Among frameworks that can draw in developers, those with more mature and comprehensive token economies will stand out.

The "Memecoin" trait of Agent frameworks is also easy to understand. None of the frameworks above currently has sound tokenomics. Their tokens have no use cases or extremely limited ones, their business models are unproven, and none has an effective token flywheel. Each framework remains separate from its token, with no organic bond between the two. Token price increases rely almost entirely on FOMO rather than fundamental support, and there is not enough of a moat to ensure stable, lasting value growth. The frameworks themselves also appear relatively crude, and their valuations do not match their actual worth, so they strongly exhibit "Memecoin" characteristics.

Notably, the "wave-particle duality" of Agent frameworks is not a flaw. It should not be crudely read as a half-measure that is neither a pure Memecoin nor a functional product. As I argued in my previous article, lightweight Agents draped in an ambiguous Memecoin veil signal that a new asset development path is emerging, one where community culture and fundamentals no longer conflict. Despite the initial bubble and uncertainty, the potential of Agent frameworks to attract developers and drive application adoption cannot be overlooked. In the future, frameworks that combine robust token economies with vibrant developer ecosystems may become pivotal pillars of this sector.
